Creating Effective Visualizations

This section focuses on practical strategies and techniques for designing clear and impactful visualizations using the diverse plotting tools provided in the EDA Toolkit.

Heuristics for Visualizations

When creating visualizations, there are several key heuristics to keep in mind:

Clarity: The visualization should clearly convey the intended information without ambiguity.

Simplicity: Avoid overcomplicating visualizations with unnecessary elements; focus on the data and insights.

Consistency: Ensure consistent use of colors, shapes, and scales across visualizations to facilitate comparisons.

Methodologies

The EDA Toolkit supports the following methodologies for creating effective visualizations:

KDE and Histograms Plots: Useful for showing the distribution of a single variable. When combined, these can provide a clearer picture of data density and distribution.

Feature Scaling and Outliers: Identifying outliers is critical for understanding the data’s distribution and potential anomalies. The EDA Toolkit offers various methods for outlier detection, including enhanced visualizations using box plots and scatter plots.

Stacked Crosstab Plots: These are used to display multiple data series on the same chart, comparing cumulative quantities across categories. In addition to the visual stacked bar plots, the corresponding crosstab table is printed alongside the visualization, providing detailed numerical insight into how the data is distributed across different categories. This combination allows for both a visual and tabular representation of categorical data, enhancing interpretability.

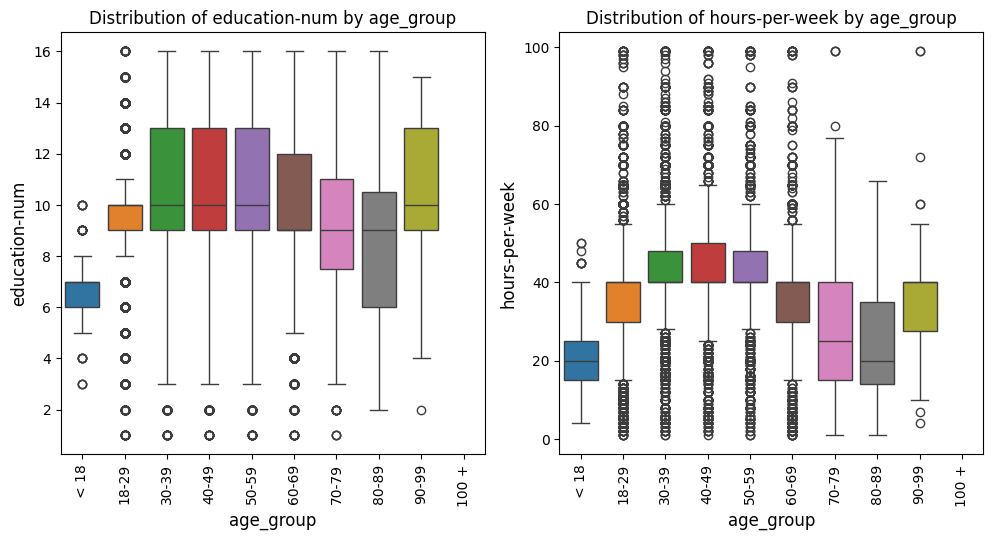

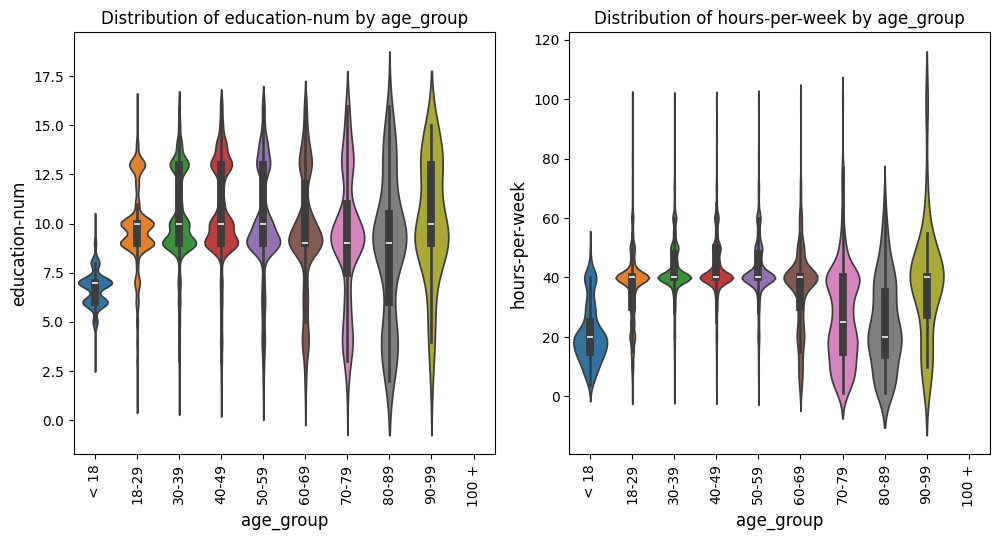

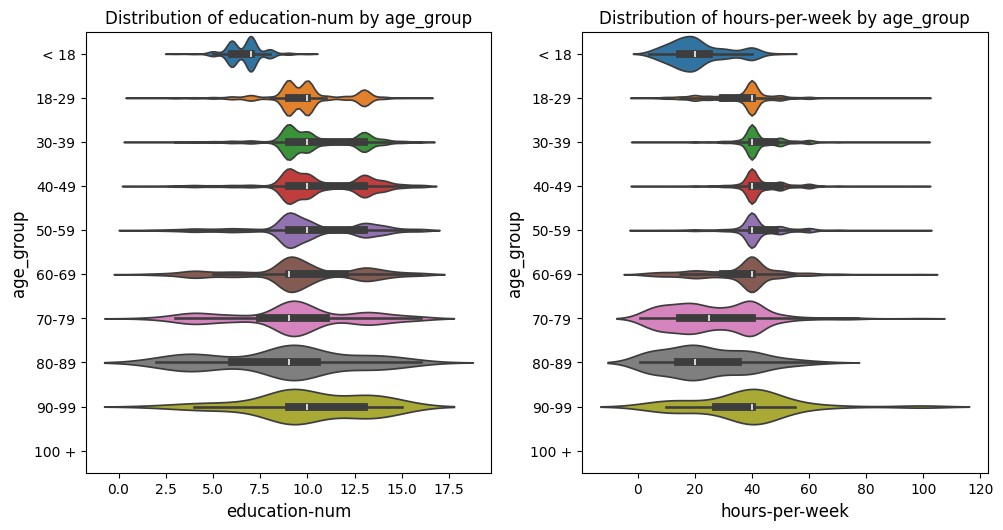

Box and Violin Plots: Useful for visualizing the distribution of data points, identifying outliers, and understanding the spread of the data. Box plots are particularly effective when visualizing multiple categories side by side, enabling comparisons across groups. Violin plots provide additional insights by showing the distribution’s density, giving a fuller picture of the data’s distribution shape.

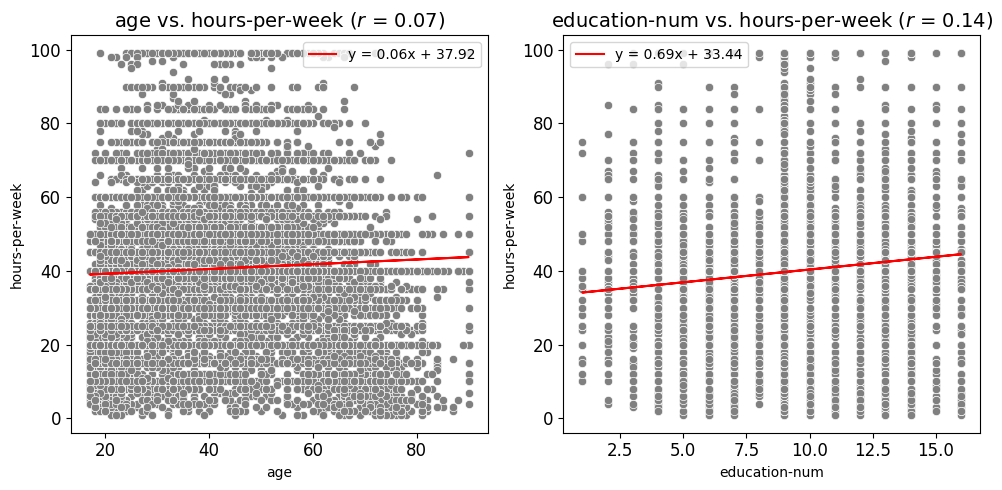

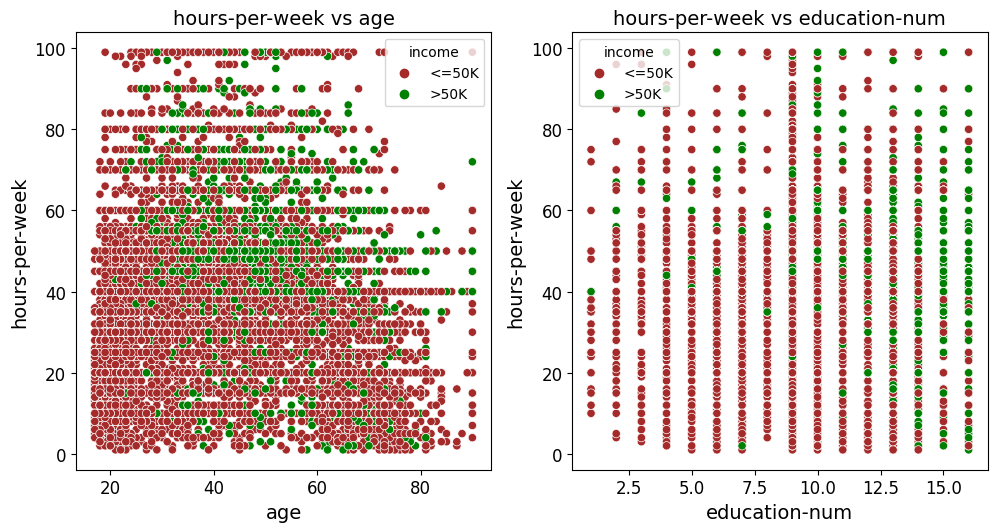

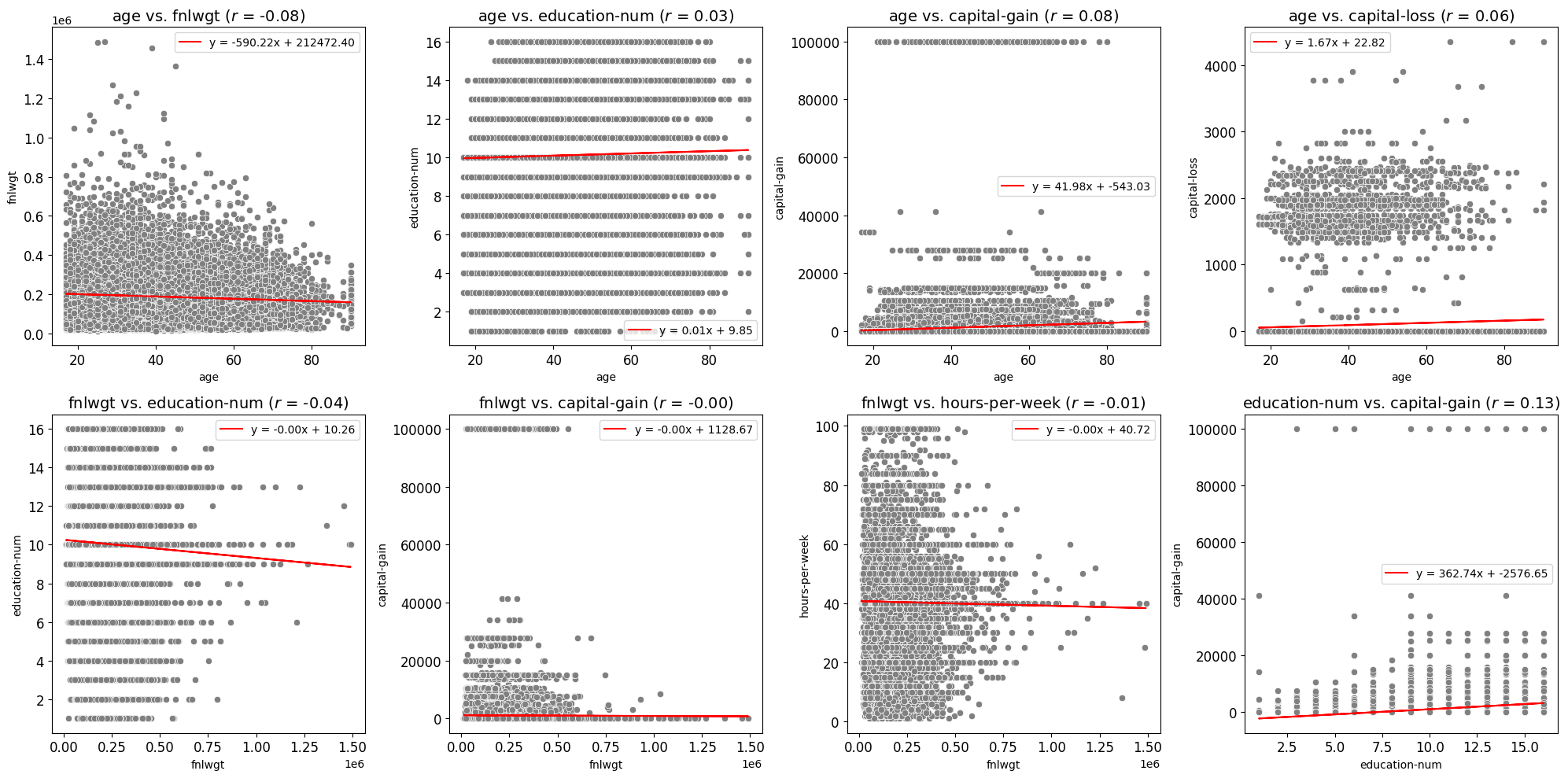

Scatter Plots and Best Fit Lines: Effective for visualizing relationships between two continuous variables. Scatter plots can also be enhanced with regression lines or trend lines to identify relationships more clearly.

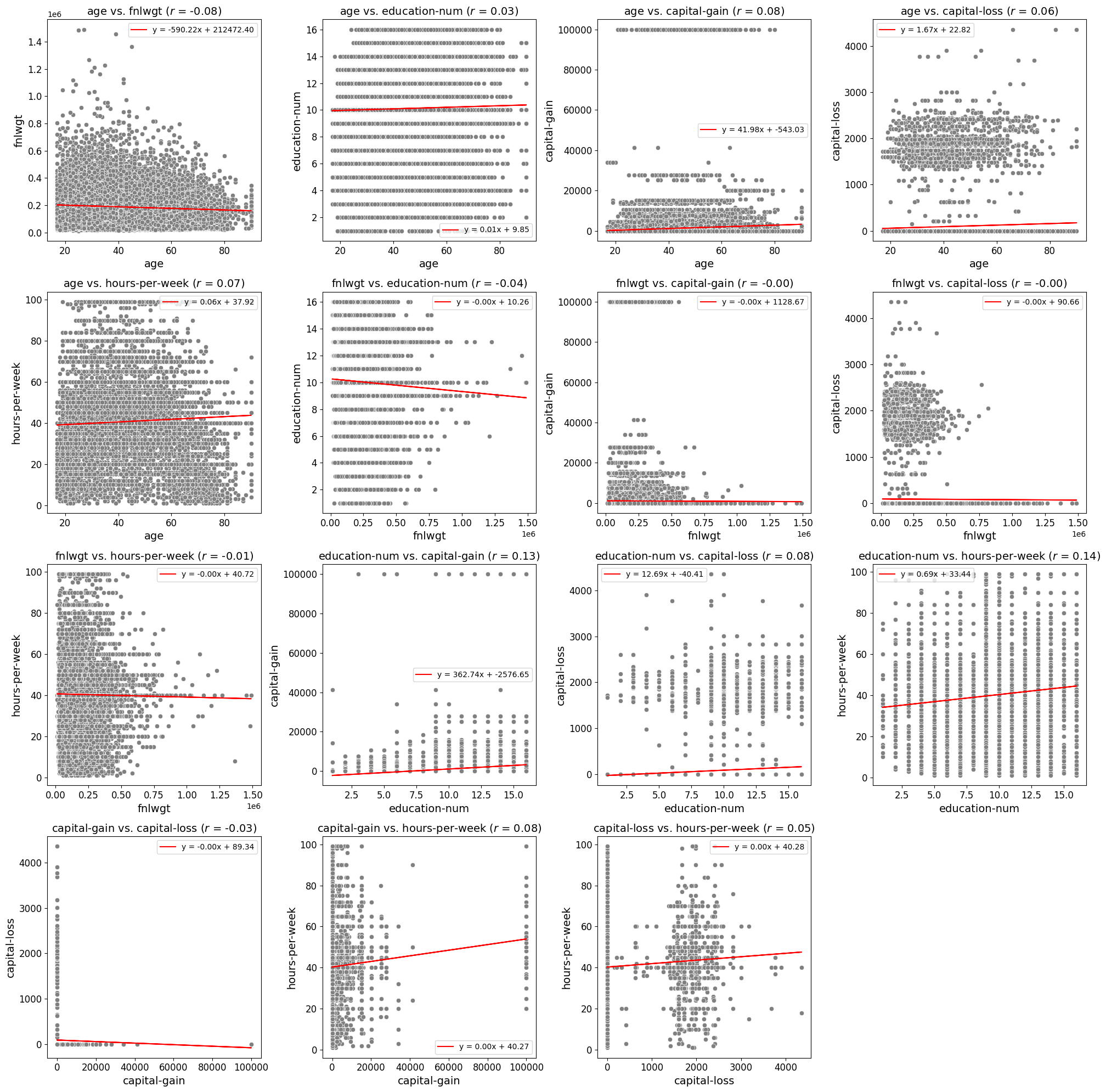

Correlation Matrices: Helpful for visualizing the strength of relationships between multiple variables. Correlation heatmaps use color gradients to indicate the degree of correlation, with options for annotating the values directly on the heatmap.

Partial Dependence Plots: Useful for visualizing the relationship between a target variable and one or more features after accounting for the average effect of the other features. These plots are often used in model interpretability to understand how specific variables influence predictions.

Histogram Distribution Plots

Generate histogram and/or density distribution plots (KDE or parametric) for specified columns in a DataFrame.

The plot_distributions function is a flexible tool designed for visualizing

the distribution of numerical data using histograms, density plots, or a

combination of both. It supports kernel density estimation (KDE) as well as

parametric probability density functions, and provides extensive control over

plot appearance, layout, scaling, and statistical overlays.

This function is intended for exploratory data analysis where understanding the shape, spread, and modality of numeric variables is critical.

Key Features and Parameters

Flexible Plotting: Supports histogram-only, density-only, or combined histogram and density visualizations.

Density Estimation: Density overlays may be rendered using KDE or parametric distributions.

Customization: Fine-grained control over colors, binning, layout, labels, titles, legends, and font sizes.

Scientific Notation Control: Optional disabling of scientific notation on axes for improved readability.

Log Scaling: Supports selective log scaling of variables that span multiple orders of magnitude.

Output Options: Supports saving combined figures or per-variable plots in PNG or SVG format.

- plot_distributions(df, vars_of_interest=None, figsize=(5, 5), subplot_figsize=None, hist_color='#0000FF', density_color=None, mean_color='#000000', median_color='#000000', hist_edgecolor='#000000', hue=None, fill=True, fill_alpha=1, n_rows=None, n_cols=None, w_pad=1.0, h_pad=1.0, image_path_png=None, image_path_svg=None, image_filename=None, bbox_inches=None, single_var_image_filename=None, y_axis_label='Density', plot_type='hist', log_scale_vars=None, bins='auto', binwidth=None, label_fontsize=10, tick_fontsize=10, text_wrap=50, disable_sci_notation=False, stat='density', xlim=None, ylim=None, plot_mean=False, plot_median=False, std_dev_levels=None, std_color='#808080', label_names=None, show_legend=True, legend_loc='best', custom_xlabels=None, custom_titles=None, **kwargs)

- Parameters:

df (pandas.DataFrame) – The DataFrame containing the data to plot.

vars_of_interest (list of str, optional) – List of column names for which to generate distribution plots. If

'all', plots will be generated for all numeric columns.figsize (tuple of int, optional) – Size of each individual plot, default is

(5, 5).subplot_figsize (tuple of int, optional) – Size of the overall grid of subplots when multiple plots are generated.

hist_color (str, optional) – Color of the histogram bars.

density_color (str, optional) – Color of the density plot. If

None, Matplotlib’s default line color is used.mean_color (str, optional) – Color of the mean line if

plot_meanis True.median_color (str, optional) – Color of the median line if

plot_medianis True.hist_edgecolor (str, optional) – Color of the histogram bar edges.

hue (str, optional) – Column name to group data by.

fill (bool, optional) – Whether to fill the histogram bars with color.

fill_alpha (float, optional) – Alpha transparency for histogram fill.

n_rows (int, optional) – Number of rows in the subplot grid.

n_cols (int, optional) – Number of columns in the subplot grid.

w_pad (float, optional) – Width padding between subplots.

h_pad (float, optional) – Height padding between subplots.

image_path_png (str, optional) – Directory path to save PNG images.

image_path_svg (str, optional) – Directory path to save SVG images.

image_filename (str, optional) – Filename to use when saving combined plots.

bbox_inches (str, optional) – Bounding box used when saving figures.

single_var_image_filename (str, optional) – Filename prefix used when saving per-variable plots.

y_axis_label (str, optional) – Label displayed on the y-axis.

plot_type (str, optional) – Type of plot to generate (

'hist','density', or'both').log_scale_vars (str or list of str, optional) – Variable name(s) to apply log scaling.

bins (int or sequence, optional) – Histogram bin specification.

binwidth (float, optional) – Width of histogram bins.

label_fontsize (int, optional) – Font size for axis labels and titles.

tick_fontsize (int, optional) – Font size for tick labels.

text_wrap (int, optional) – Maximum width before wrapping labels or titles.

disable_sci_notation (bool, optional) – Disable scientific notation on axes.

stat (str, optional) – Aggregate statistic computed per bin.

plot_mean (bool, optional) – Plot the mean as a vertical line.

plot_median (bool, optional) – Plot the median as a vertical line.

std_dev_levels (list of int, optional) – Standard deviation levels to plot.

std_color (str or list of str, optional) – Color(s) for standard deviation lines.

label_names (dict, optional) – Custom labels for variables.

show_legend (bool, optional) – Whether to display the legend.

legend_loc (str, optional) – Location of the legend.

custom_xlabels (dict, optional) – Custom x-axis labels per variable.

custom_titles (dict, optional) – Custom titles per variable.

kwargs (additional keyword arguments) – Additional keyword arguments passed to the plotting backend.

- Raises:

If

plot_typeis not one of'hist','density', or'both'.If

statis not one of'count','frequency','probability','proportion','percent', or'density'.If

log_scale_varscontains variables that are not present in the DataFrame.If

fillis set toFalseandhist_edgecolororfill_alphais specified.If

binsandbinwidthare both specified.If

subplot_figsizeis provided when only one plot is being created.

If both

binsandbinwidthare specified, which may affect performance.

- Returns:

None

Notes

If you do not set n_rows or n_cols, the function will automatically

calculate an optimal subplot layout based on the number of variables being

plotted.

To save images, at least one of image_path_png or image_path_svg must

be specified. Saving is triggered by providing image_filename or

single_var_image_filename.

Deprecation Notice

Warning

kde_distributions is deprecated and will be removed in a future release.

Please use plot_distributions instead. The deprecated function is

retained only as a thin wrapper for backward compatibility.

If you continue to use kde_distributions, you will receive a

DeprecationWarning indicating that the function is deprecated.

The following summarizes the key changes from kde_distributions to

plot_distributions:

Updates

Function rename and deprecation

kde_distributionsis now deprecated and serves only as a wrapper.plot_distributionsis the new canonical API.Density plotting is no longer limited to KDE.

Added support for parametric density overlays using distributions from

scipy.stats.New parameters introduced:

density_functionto specify KDE and/or parametric distributions.density_fitto control the fitting method ('MLE'or'MM').

kde_colorhas been replaced bydensity_color.If

density_color=None, density overlays use Matplotlib’s default line color (typically the standard Python blue,C0).plot_typenow supports'hist','density', or'both'.Density plots may consist of KDE, parametric fits, or a combination.

Parameter ordering, naming conventions, and documentation style closely follow the original

kde_distributionsAPI.Existing user code calling

kde_distributionscontinues to work without modification.

KDE and Histograms Example

In the example below, the plot_distributions function is used to generate

histograms for several variables of interest: "age", "education-num",

and "hours-per-week". These variables represent different demographic and

work-related attributes from the dataset. The plot_type="both" parameter

ensures that density curves are overlaid on the histograms, providing a

smoothed representation of each variable’s distribution.

By default, density overlays are rendered using kernel density estimation (KDE).

In this example, the density curves are explicitly colored red using

density_color="red". If no density color is provided, the function falls

back to Matplotlib’s default line color.

The visualizations are arranged in a single row of three columns, as specified

by n_rows=1 and n_cols=3. The overall size of the subplot grid is set to

14 inches wide and 4 inches tall using subplot_figsize=(14, 4), ensuring that

each distribution is displayed clearly without overcrowding.

The fill=True parameter fills the histogram bars with color, while

fill_alpha=0.60 applies partial transparency to allow the density overlays

to remain visible. Spacing between subplots is controlled automatically, with

the figure layout tightened using bbox_inches="tight" when rendering.

To handle longer titles and labels, the text_wrap=50 parameter ensures that

text wraps cleanly onto multiple lines after 50 characters. The variables listed

in vars_of_interest are passed directly to the function and plotted in the

order provided.

The y-axis for all plots is labeled as "Density" via y_axis_label="Density",

reflecting that both the histogram heights and the density curves represent

probability density. The histograms are divided into 10 bins (bins=10),

providing a clear and interpretable view of each distribution.

Finally, the font sizes for axis labels and tick labels are set to 16 points

(label_fontsize=16) and 14 points (tick_fontsize=14), respectively,

ensuring that all text elements remain legible and presentation-ready.

from eda_toolkit import plot_distributions

vars_of_interest = [

"age",

"education-num",

"hours-per-week",

]

plot_distributions(

df=df,

n_rows=1,

n_cols=3,

subplot_figsize=(14, 4),

fill=True,

fill_alpha=0.60,

text_wrap=50,

density_color="red",

bbox_inches="tight",

vars_of_interest=vars_of_interest,

y_axis_label="Density",

bins=10,

plot_type="both",

label_fontsize=16,

tick_fontsize=14,

)

Histogram Example (Density)

In this example, the plot_distributions() function is used to generate histograms for

the variables "age", "education-num", and "hours-per-week" but with

plot_type="hist", meaning no KDE plots are included—only histograms are displayed.

The plots are arranged in a single row of four columns (n_rows=1, n_cols=3),

with a subplot grid size of 14x4 inches (subplot_figsize=(14, 4)). The histograms are

divided into 10 bins (bins=10), and the y-axis is labeled “Density” (y_axis_label="Density").

Font sizes for the axis labels and tick labels are set to 16 and 14 points,

respectively, ensuring clarity in the visualizations. This setup focuses on the

histogram representation without the KDE overlay.

from eda_toolkit import plot_distributions

vars_of_interest = [

"age",

"education-num",

"hours-per-week",

]

plot_distributions(

df=df,

n_rows=1,

n_cols=3,

subplot_figsize=(14, 4),

fill=True,

text_wrap=50,

bbox_inches="tight",

vars_of_interest=vars_of_interest,

y_axis_label="Density",

bins=10,

plot_type="hist",

label_fontsize=16,

tick_fontsize=14,

)

Histogram Example (Count)

In this example, the plot_distributions() function is modified to generate histograms

with a few key changes. The hist_color is set to “orange”, changing the color of the

histogram bars. The y-axis label is updated to “Count” (y_axis_label="Count"),

reflecting that the histograms display the count of observations within each bin.

Additionally, the stat parameter is set to "Count" to show the actual counts instead of

densities. The rest of the parameters remain the same as in the previous example,

with the plots arranged in a single row of four columns (n_rows=1, n_cols=3),

a subplot grid size of 14x4 inches, and a bin count of 10. This setup focuses on

visualizing the raw counts in the dataset using orange-colored histograms.

from eda_toolkit import plot_distributions

vars_of_interest = [

"age",

"education-num",

"hours-per-week",

]

plot_distributions(

df=df,

n_rows=1,

n_cols=3,

subplot_figsize=(14, 4),

text_wrap=50,

hist_color="orange",

bbox_inches="tight",

vars_of_interest=vars_of_interest,

y_axis_label="Count",

bins=10,

plot_type="hist",

stat="Count",

label_fontsize=16,

tick_fontsize=14,

)

Histogram Example - (Mean and Median)

In this example, the plot_distributions() function is customized to generate

histograms that include mean and median lines. The mean_color is set to "blue"

and the median_color is set to "black" (default), allowing for a clear distinction

between the two statistical measures. The function parameters are adjusted to

ensure that both the mean and median lines are plotted (plot_mean=True, plot_median=True).

The y_axis_label remains "Density", indicating that the histograms

represent the density of observations within each bin. The histogram bars are

colored using hist_color="brown", with a fill_alpha=0.60 while the s

tatistical overlays enhance the interpretability of the data. The layout is

configured with a single row and multiple columns (n_rows=1, n_cols=3), and

the subplot grid size is set to 15x5 inches. This example highlights how to visualize

central tendencies within the data using a histogram that prominently displays

the mean and median.

from eda_toolkit import plot_distributions

vars_of_interest = [

"age",

"education-num",

"hours-per-week",

]

plot_distributions(

df=df,

n_rows=1,

n_cols=3,

subplot_figsize=(14, 4),

text_wrap=50,

hist_color="brown",

bbox_inches="tight",

vars_of_interest=vars_of_interest,

y_axis_label="Density",

bins=10,

fill_alpha=0.60,

plot_type="hist",

stat="Density",

label_fontsize=16,

tick_fontsize=14,

plot_mean=True,

plot_median=True,

mean_color="blue",

)

Histogram Example - (Mean, Median, and Std. Deviation)

In this example, the plot_distributions() function is customized to generate

a histogram that include mean, median, and 3 standard deviation lines. The

mean_color is set to "blue" and the median_color is set to "black",

allowing for a clear distinction between these two central tendency measures.

The function parameters are adjusted to ensure that both the mean and median lines

are plotted (plot_mean=True, plot_median=True). The y_axis_label remains

"Density", indicating that the histograms represent the density of observations

within each bin. The histogram bars are colored using hist_color="brown",

with a fill_alpha=0.40, which adjusts the transparency of the fill color.

Additionally, standard deviation bands are plotted using colors "purple",

"green", and "silver" for one, two, and three standard deviations, respectively.

The layout is configured with a single row and multiple columns (n_rows=1, n_cols=3),

and the subplot grid size is set to 15x5 inches. This setup is particularly useful for

visualizing the central tendencies within the data while also providing a clear

view of the distribution and spread through the standard deviation bands. The

configuration used in this example showcases how histograms can be enhanced with

statistical overlays to provide deeper insights into the data.

Note

You have the freedom to choose whether to plot the mean, median, and standard deviation lines. You can display one, none, or all of these simultaneously.

from eda_toolkit import plot_distributions

vars_of_interest = [

"age",

]

plot_distributions(

df=df,

figsize=(10, 6),

text_wrap=50,

hist_color="brown",

bbox_inches="tight",

vars_of_interest=vars_of_interest,

y_axis_label="Density",

bins=10,

fill_alpha=0.40,

plot_type="both",

stat="Density",

density_color="red",

label_fontsize=16,

tick_fontsize=14,

plot_mean=True,

plot_median=True,

mean_color="blue",

std_dev_levels=[

1,

2,

3,

],

std_color=[

"purple",

"green",

"silver",

],

)

KDE and Parametric Density Fit Example

In the example below, the plot_distributions function is used to visualize the

distribution of several numeric variables, including "age",

"education-num", and "hours-per-week".

The plot_type="both" parameter instructs the function to render both

histograms and density overlays on the same axes. In this case, the density

overlays include a combination of kernel density estimation (KDE) and two

parametric probability density functions, a normal distribution

("norm") and a log-normal distribution ("lognorm"), specified via

density_function=["kde", "norm", "lognorm"].

Each density overlay is rendered in a distinct color, controlled by

density_color=["blue", "black", "red"], allowing the KDE, normal fit, and

log-normal fit to be visually distinguished. The parametric distributions are

fit using maximum likelihood estimation (density_fit="MLE"), providing a

statistically principled comparison between empirical density and theoretical

models.

Note

For a detailed mathematical discussion of kernel density estimation, parametric density models, and parameter estimation via the Method of Moments (MoM) and Maximum Likelihood Estimation (MLE), see Parameter Estimation for Density Models and Distribution GOF Plots.

from eda_toolkit import plot_distributions

vars_of_interest = [

"age",

]

plot_distributions(

df=df,

vars_of_interest=vars_of_interest,

# layout

n_rows=1,

n_cols=3,

hue=None,

hist_color="yellow",

figsize=(10, 6),

plot_type="both",

stat="density",

density_function=["kde", "norm", "lognorm"],

density_color=["blue", "black", "red"],

density_fit="MLE",

bins=10,

fill=True,

y_axis_label="Density",

text_wrap=50,

label_fontsize=16,

tick_fontsize=14,

legend_loc="best",

bbox_inches="tight",

image_filename="age_distribution_norm_fit",

image_path_png=image_path_png,

image_path_svg=image_path_svg,

)

Grouped Distributions

Plot grouped distributions of numeric features given a binary variable.

The grouped_distributions function visualizes how selected numeric features

are distributed grouped by a binary grouping variable (for example, an

outcome label or class membership). For each feature, the distributions of the

two groups are overlaid on the same axis, either as histograms or as filled

kernel density curves.

This function is designed for exploratory analysis, subgroup comparison, and fairness inspection, where understanding distributional differences between two groups is critical.

Supported plot styles:

"hist": Overlaid histograms using shared or independent bins"density": Overlaid filled kernel density estimates (always normalized)

Normalization behavior:

normalize="count": Raw counts (histograms only)normalize="density": Probability density (histograms or density plots)

- grouped_distributions(df, features, by, *, bins=30, normalize='density', plot_style='hist', alpha=0.6, colors=None, n_rows=None, n_cols=None, common_bins=True, show_legend=True, legend_loc='best', label_fontsize=12, tick_fontsize=10, text_wrap=50, figsize=(10, 6), image_path_png=None, image_path_svg=None, image_filename=None)

- Parameters:

df (pandas.DataFrame) – Input DataFrame containing the features and grouping variable.

features (list of str) – List of numeric feature column names to plot.

by (str) – Name of the binary column used to condition the distributions. The column must contain exactly two unique, non-null values.

bins (int or sequence, optional) – Number of bins or explicit bin edges for histogram plots. Default is

30.normalize (str, optional) – Controls histogram normalization. Options are

"density"or"count". Ignored for density plots, which always use probability density.plot_style (str, optional) – Plot type to generate. Options are

"hist"or"density". Default is"hist".alpha (float, optional) – Transparency level for histogram bars or density fills. Default is

0.6.colors (dict, optional) – Mapping from group value to color. Keys must match the two unique values in the

bycolumn. IfNone, a default color scheme is used.n_rows (int, optional) – Number of subplot rows. If

None, determined automatically.n_cols (int, optional) – Number of subplot columns. If

None, determined automatically.common_bins (bool, optional) – If

True, both groups share identical histogram bin edges. Ignored for density plots.show_legend (bool, optional) – Whether to display a legend identifying the two groups. Default is

True.legend_loc (str, optional) – Legend placement passed directly to Matplotlib. Default is

"best".label_fontsize (int, optional) – Font size for axis labels and titles.

tick_fontsize (int, optional) – Font size for tick labels and legend text.

text_wrap (int, optional) – Maximum character width before wrapping titles.

figsize (tuple of int, optional) – Size of the overall figure in inches. Default is

(10, 6).image_path_png (str, optional) – Directory path to save the figure as a PNG file.

image_path_svg (str, optional) – Directory path to save the figure as an SVG file.

image_filename (str, optional) – Base filename (without extension) for saving the figure.

- Raises:

If the

bycolumn is not binary.If

plot_styleis not one of"hist"or"density".If

normalizeis not one of"density"or"count".If

plot_style="density"andnormalize!="density".If

image_filenameis provided but neitherimage_path_pngnorimage_path_svgis specified.If the subplot grid is too small for the number of features.

- Returns:

None

Notes

All grouped distributions are plotted as overlays on a single axis per feature.

Density plots always represent probability density and do not support count-based normalization.

When

common_bins=True, histogram bin edges are computed jointly across both groups to enable direct shape comparison.Font sizes are specified in absolute points and may appear small on large figures or dense subplot grids.

Grouped Density Plot Example

In the example below, the grouped_distributions function is used to compare

the distributions of several numeric features conditioned on income category.

The grouping variable "income" is binary ("<=50K" vs ">50K"), making

it suitable for grouped overlay plots.

The selected features include demographic and financial attributes:

"age""education-num""hours-per-week"

The plot_style="density" option instructs the function to render filled

kernel density estimates for each group rather than histograms. Density plots

are always normalized, allowing direct comparison of distributional shape even

when group sizes differ substantially.

Custom colors are explicitly provided via the colors argument to ensure

consistent visual identification of income groups across all features. The

alpha=0.6 parameter applies partial transparency, making overlapping density

regions easier to interpret.

The resulting figure is saved in both PNG and SVG formats using a shared base filename.

from eda_toolkit.plots import grouped_distributions

features = [

"age",

"education-num",

"hours-per-week",

]

group_colors = {

"<=50K": "black", # black

">50K": "orange", # orange

}

grouped_distributions(

df=df,

features=features,

by="income",

bins=30,

colors=group_colors,

n_rows=1,

normalize="density",

alpha=0.6,

figsize=(14, 4),

plot_style="density",

image_path_png=image_path_png,

image_path_svg=image_path_svg,

image_filename="grouped_distributions_adult_income",

label_fontsize=16,

tick_fontsize=14,

)

Grouped Histogram Plot Example

In this example, the grouped_distributions function is used to visualize

overlaid grouped histograms for several numeric features, grouped by

income category. As in the previous example, the grouping variable "income"

is binary ("<=50K" vs ">50K"), enabling direct comparison between the two

subpopulations.

Unlike density-based plots, this example uses

plot_style="hist", which renders binned histograms rather than smoothed

kernel density estimates. This representation emphasizes the empirical mass

and frequency structure of each feature, making it easier to identify discrete

concentration, skewness, and potential outliers.

The histograms are normalized to probability density

(normalize="density"), ensuring that the total area under each group’s

histogram integrates to one. This allows meaningful comparison of distribution

shapes even when the two income groups differ in sample size.

A shared binning strategy is used by default, ensuring that both income groups are evaluated against identical bin edges for each feature. Custom group colors are applied consistently across all subplots to maintain interpretability.

The resulting figure is saved in both PNG and SVG formats for downstream use in reports or publications.

from eda_toolkit.plots import grouped_distributions

features = [

"age",

"education-num",

"hours-per-week",

]

group_colors = {

"<=50K": "black",

">50K": "orange",

}

grouped_distributions(

df=df,

features=features,

by="income",

bins=30,

n_rows=1,

colors=group_colors,

normalize="density",

alpha=0.6,

figsize=(14, 4),

plot_style="hist",

image_path_png=image_path_png,

image_path_svg=image_path_svg,

image_filename="cond_histograms_adult_income_hist",

label_fontsize=16,

tick_fontsize=14,

)

Distribution Goodness-of-Fit Plots

Generate goodness-of-fit (GOF) diagnostic plots to compare empirical data against fitted theoretical distributions or an empirical reference sample.

The distribution_gof_plots function provides distributional diagnostics to

evaluate how well one or more candidate probability distributions fit a variable

from a DataFrame. It supports both theoretical (parametric) comparisons, where

distribution parameters are estimated via Maximum Likelihood Estimation (MLE) or

Method of Moments (MM), and empirical QQ comparisons, where sample quantiles are

compared directly to a reference dataset.

The function currently supports the following diagnostic visualizations:

Quantile-Quantile (QQ) plots for assessing agreement between quantiles

CDF-based plots, including optional exceedance (survival) curves via tail control

Note

For a detailed mathematical discussion of kernel density estimation, parametric density models, and parameter estimation via the Method of Moments (MoM) and Maximum Likelihood Estimation (MLE), see Parameter Estimation for Density Models and Distribution GOF Plots.

- distribution_gof_plots(df, var, dist='norm', fit_method='MLE', plot_types=('qq', 'cdf'), qq_type='theoretical', reference_data=None, scale='linear', tail='both', figsize=(6, 5), xlim=None, ylim=None, show_reference=True, label_fontsize=10, tick_fontsize=10, legend_loc='best', text_wrap=50, palette=None, image_path_png=None, image_path_svg=None, image_filename=None)

Generate goodness-of-fit (GOF) diagnostic plots for comparing empirical data to theoretical or empirical reference distributions.

- Parameters:

df (pandas.DataFrame) – Input DataFrame containing the data.

var (str) – Column name in

dfto evaluate.dist (str or list of str, optional) – Distribution name(s) to evaluate. Each entry must correspond to a valid continuous distribution in

scipy.stats(e.g.,"norm","lognorm","gamma").fit_method ({"MLE", "MM"}, optional) – Parameter estimation method for theoretical distributions. Options are: -

"MLE": maximum likelihood estimation -"MM": method of momentsplot_types (str or list of {"qq", "cdf"}, optional) – Diagnostic plot type(s) to generate. Options are: -

"qq": quantile-quantile plot -"cdf": cumulative distribution function plot (with exceedance behavior controlled bytail)qq_type ({"theoretical", "empirical"}, optional) – Type of QQ plot to generate: -

"theoretical": sample quantiles vs fitted theoretical distribution -"empirical": sample quantiles vs reference empirical distributionreference_data (numpy.ndarray, optional) – Reference empirical sample used when

qq_type="empirical". Required for empirical QQ plots and ignored otherwise. Must contain at least two observations.scale ({"linear", "log"}, optional) – Scale applied to the y-axis of applicable plots.

tail ({"lower", "upper", "both"}, optional) – Tail behavior for CDF-based plots: -

"lower": plot CDF only -"upper": plot exceedance probability (1 - CDF) only -"both": plot both CDF and exceedance curvesfigsize (tuple of int, optional) – Base figure size (width, height) for each diagnostic plot. When multiple plot types are requested, the total width scales by the number of plots.

xlim (tuple of (float, float), optional) – Limits for the x-axis as (min, max). Applied after all distributions are drawn to ensure consistent scaling.

ylim (tuple of (float, float), optional) – Limits for the y-axis as (min, max). Applied after all distributions are drawn to ensure consistent scaling.

show_reference (bool, optional) – Whether to draw a reference (identity) line on theoretical QQ plots. Ignored for empirical QQ plots.

label_fontsize (int, optional) – Font size for axis labels and titles.

tick_fontsize (int, optional) – Font size for tick labels.

legend_loc (str, optional) – Legend placement passed to Matplotlib (e.g.,

"best","upper right","lower left").text_wrap (int, optional) – Maximum character width for wrapping titles and labels.

palette (dict of {str: str}, optional) – Mapping from distribution name to color. Keys must match the entries in

dist.image_path_png (str, optional) – Directory path for saving PNG output.

image_path_svg (str, optional) – Directory path for saving SVG output.

image_filename (str, optional) – Base filename used when saving plots (without extension).

- Raises:

If

plot_typescontains invalid values (valid:"qq","cdf").If

qq_typeis not one of"theoretical"or"empirical".If

scaleis not one of"linear"or"log".If

tailis not one of"lower","upper", or"both".If

paletteis provided but does not define colors for every distribution indist.If

qq_type="empirical"is used without validreference_data(must be provided and contain at least two observations).If

image_filenameis provided but neitherimage_path_pngnorimage_path_svgis specified.

- Returns:

None

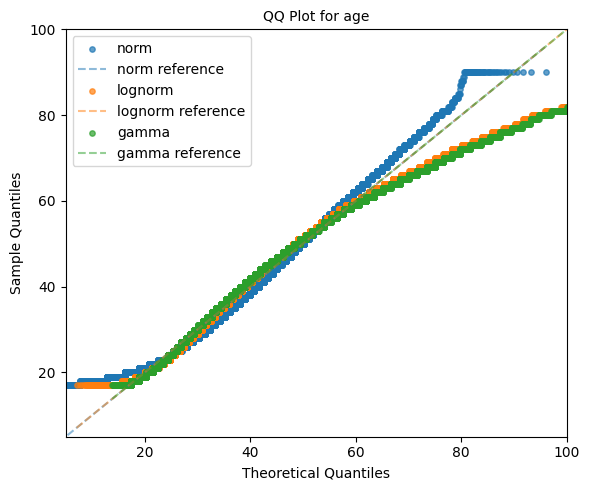

Theoretical QQ Plot Example

In the example below, the distribution_gof_plots function is used to generate

theoretical quantile–quantile (QQ) plots for the variable "age". The goal

is to assess how well several candidate parametric distributions fit the empirical

data.

Three commonly used continuous distributions are evaluated:

Normal distribution (

"norm")Log-normal distribution (

"lognorm")Gamma distribution (

"gamma")

Each distribution is fit to the data using the default maximum likelihood

estimation (MLE) procedure. The plot_types="qq" argument instructs the

function to generate only QQ plots, while qq_type="theoretical" specifies that

sample quantiles should be compared against quantiles derived from the fitted

theoretical distributions.

A reference identity line is included via show_reference=True, allowing for

direct visual assessment of deviations from the ideal 1:1 relationship. If the

empirical data follow a given distribution closely, the corresponding points will

align tightly along this reference line.

Distinct colors are assigned to each fitted distribution using the palette

parameter, making it easy to visually compare goodness-of-fit across models. Axis

limits are constrained using xlim and ylim to focus the comparison on a

relevant range of values and ensure consistent scaling.

The resulting figure is saved in both PNG and SVG formats using the provided output paths and filename.

from eda_toolkit import distribution_gof_plots

distribution_gof_plots(

df,

var="age",

dist=["norm", "lognorm", "gamma"],

plot_types="qq",

qq_type="theoretical",

show_reference=True,

image_path_png=image_path_png,

image_path_svg=image_path_svg,

palette={

"norm": "tab:blue",

"lognorm": "tab:orange",

"gamma": "tab:green",

},

image_filename="gof_qq_age_adult_income",

xlim=(5, 100),

ylim=(5, 100),

)

Interpretation

Points closely following the diagonal reference line indicate a strong agreement between the empirical distribution and the theoretical model.

Systematic curvature suggests skewness or variance mismatches.

Deviations in the tails highlight poor tail behavior, which is often critical in risk modeling, reliability analysis, and anomaly detection contexts.

This diagnostic provides a fast, visual comparison of multiple candidate distributions and is typically used as a first-pass validation step before making distributional assumptions in downstream modeling.

Empirical QQ Plot Example (Sample vs Reference Data)

In this example, the distribution_gof_plots function is used to generate an

empirical quantile–quantile (QQ) plot, comparing the distribution of a target

sample against a reference empirical distribution, rather than against a

theoretical model.

Unlike theoretical QQ plots, which compare sample quantiles to quantiles derived from a fitted parametric distribution, empirical QQ plots compare quantiles between two observed samples directly. This makes them particularly useful for distributional comparisons across subpopulations, cohorts, or time periods.

Here, the variable "age" is analyzed for the full dataset and compared against

a reference sample consisting only of observations where sex == "Male". The

reference data are extracted explicitly and passed via the reference_data

parameter.

Although a distribution name ("norm") is still required for labeling purposes,

no theoretical distribution is involved in the QQ geometry when

qq_type="empirical". Instead, matched empirical quantiles from the sample and

reference data are plotted against one another.

reference_data = df.loc[df["sex"] == "Male", "age"].dropna().values

from eda_toolkit import distribution_gof_plots

distribution_gof_plots(

df,

var="age",

dist="norm",

plot_types="qq",

qq_type="empirical",

reference_data=reference_data,

)

Interpretation

Points lying close to the diagonal indicate that the sample and reference distributions are similar across quantiles.

Systematic deviations from the diagonal reveal distributional shifts, such as differences in central tendency, spread, or tail behavior.

Curvature in the upper or lower quantiles highlights differences in tail behavior between the two samples.

Empirical QQ plots are especially valuable for fairness analysis, cohort comparison, and data drift detection, where direct comparison between groups is preferred over assumptions of parametric form.

CDF Plot Example (Lower and Upper Tails)

In this example, the distribution_gof_plots function is used to generate

cumulative distribution function (CDF) plots for the variable "age",

including both the lower tail (CDF) and upper tail (exceedance probability).

Two candidate parametric distributions are evaluated:

Normal distribution (

"norm")Log-normal distribution (

"lognorm")

The plot_types="cdf" argument instructs the function to produce CDF-based

diagnostics, while the default tail="both" setting ensures that both the

CDF and exceedance curves are plotted simultaneously. This dual representation

provides a complete view of the distribution’s behavior across the full range of

values.

The CDF curves illustrate the probability that the random variable takes on a

value less than or equal to a given threshold, while the exceedance curves

(1 − CDF) highlight the probability of observing values greater than that

threshold. This is especially useful for understanding upper-tail risk and

extreme-value behavior.

All plots are rendered on a linear scale, making it easier to compare overall distributional shape and mid-range behavior between candidate models.

from eda_toolkit import distribution_gof_plots

distribution_gof_plots(

df,

var="age",

dist=["norm", "lognorm"],

plot_types="cdf",

)

Interpretation

CDF curves that rise more quickly indicate distributions with greater mass at lower values.

Exceedance curves that decay more slowly suggest heavier upper tails and higher probability of extreme observations.

Differences between the normal and log-normal fits are most pronounced in the tails, where modeling assumptions often matter most.

CDF and exceedance plots are particularly valuable in risk analysis, threshold-based decision-making, and model selection, where understanding tail behavior is as important as central tendency.

Feature Scaling and Outliers

- data_doctor(df, feature_name, data_fraction=1, scale_conversion=None, scale_conversion_kws=None, apply_cutoff=False, lower_cutoff=None, upper_cutoff=None, show_plot=True, plot_type='all', figsize=(18, 6), xlim=None, kde_ylim=None, hist_ylim=None, box_violin_ylim=None, save_plot=False, image_path_png=None, image_path_svg=None, apply_as_new_col_to_df=False, kde_kws=None, hist_kws=None, box_violin_kws=None, box_violin='boxplot', label_fontsize=12, tick_fontsize=10, random_state=None)

Analyze and transform a specific feature in a DataFrame, with options for scaling, applying cutoffs, and visualizing the results. This function also allows for the creation of a new column with the transformed data if specified. Plots can be saved in PNG or SVG format with filenames that incorporate the

plot_type,feature_name,scale_conversion, andcutoffif cutoffs are applied.- Parameters:

df (pandas.DataFrame) – The DataFrame containing the feature to analyze.

feature_name (str) – The name of the feature (column) to analyze.

data_fraction (float, optional) – Fraction of the data to analyze. Default is

1(full dataset). Useful for large datasets where a sample can represent the population. Ifapply_as_new_col_to_df=True, the full dataset is used (data_fraction=1).scale_conversion (str, optional) –

Type of conversion to apply to the feature. Options include:

'abs': Absolute values'log': Natural logarithm'sqrt': Square root'cbrt': Cube root'reciprocal': Reciprocal transformation'stdrz': Standardized (z-score)'minmax': Min-Max scaling'boxcox': Box-Cox transformation (positive values only; supportslmbdafor specific lambda oralphafor confidence interval)'robust': Robust scaling (median and IQR)'maxabs': Max-abs scaling'exp': Exponential transformation'logit': Logit transformation (values between 0 and 1)'arcsinh': Inverse hyperbolic sine'square': Squaring the values'power': Power transformation (Yeo-Johnson).

scale_conversion_kws (dict, optional) –

Additional keyword arguments to pass to the scaling functions, such as:

'alpha'for Box-Cox transformation (returns a confidence interval for lambda)'lmbda'for a specific Box-Cox transformation value'quantile_range'for robust scaling.

apply_cutoff (bool, optional (default=False)) – Whether to apply upper and/or lower cutoffs to the feature.

lower_cutoff (float, optional) – Lower bound to apply if

apply_cutoff=True.upper_cutoff (float, optional) – Upper bound to apply if

apply_cutoff=True.show_plot (bool, optional (default=True)) – Whether to display plots of the transformed feature: KDE, histogram, and boxplot/violinplot.

plot_type (str, list, or tuple, optional (default="all")) –

Specifies the type of plot(s) to produce. Options are:

'all': Generates KDE, histogram, and boxplot/violinplot.'kde': KDE plot only.'hist': Histogram plot only.'box_violin': Boxplot or violin plot only (specified bybox_violin).

If a list or tuple is provided (e.g.,

plot_type=["kde", "hist"]), the specified plots are displayed in a single row with sufficient spacing. AValueErroris raised if an invalid plot type is included.figsize (tuple or list, optional (default=(18, 6))) – Specifies the figure size for the plots. This applies to all plot types, including single plots (when

plot_typeis set to “kde”, “hist”, or “box_violin”) and multi-plot layout whenplot_typeis “all”.xlim (tuple or list, optional) – Limits for the x-axis in all plots, specified as

(xmin, xmax).kde_ylim (tuple or list, optional) – Limits for the y-axis in the KDE plot, specified as

(ymin, ymax).hist_ylim (tuple or list, optional) – Limits for the y-axis in the histogram plot, specified as

(ymin, ymax).box_violin_ylim (tuple or list, optional) – Limits for the y-axis in the boxplot or violin plot, specified as

(ymin, ymax).save_plot (bool, optional (default=False)) – Whether to save the plots as PNG and/or SVG images. If

True, the user must specify at least one ofimage_path_pngorimage_path_svg, otherwise aValueErroris raised.image_path_png (str, optional) – Directory path to save the plot as a PNG file. Only used if

save_plot=True.image_path_svg (str, optional) – Directory path to save the plot as an SVG file. Only used if

save_plot=True.apply_as_new_col_to_df (bool, optional (default=False)) –

Whether to create a new column in the DataFrame with the transformed values. If

True, the new column name is generated based on the feature name and the transformation applied:<feature_name>_<scale_conversion>: If a transformation is applied.<feature_name>_w_cutoff: If only cutoffs are applied.

For Box-Cox transformation, if

alphais specified, the confidence interval for lambda is displayed. Iflmbdais specified, the lambda value is displayed.kde_kws (dict, optional) – Additional keyword arguments to pass to the KDE plot (

seaborn.kdeplot).hist_kws (dict, optional) – Additional keyword arguments to pass to the histogram plot (

seaborn.histplot).box_violin_kws (dict, optional) – Additional keyword arguments to pass to either boxplot or violinplot.

box_violin (str, optional (default="boxplot")) – Specifies whether to plot a

boxplotorviolinplotifplot_typeis set tobox_violin.label_fontsize (int, optional (default=12)) – Font size for the axis labels and plot titles.

tick_fontsize (int, optional (default=10)) – Font size for the tick labels on both axes.

random_state (int, optional) – Seed for reproducibility when sampling the data.

- Returns:

NoneDisplays the feature’s descriptive statistics, quartile information, and outlier details. If a new column is created, confirms the addition to the DataFrame. For Box-Cox, either the lambda or its confidence interval is displayed.- Raises:

If an invalid

scale_conversionis provided.If Box-Cox transformation is applied to non-positive values.

If

save_plot=Truebut neitherimage_path_pngnorimage_path_svgis provided.If an invalid option is provided for

box_violin.If an invalid option is provided for

plot_type.If the length of transformed data does not match the original feature length.

Note

When saving plots, the filename will include the

feature_name,scale_conversion, each selectedplot_type, and, if cutoffs are applied,"_cutoff". For example, iffeature_nameis"age",scale_conversionis"boxcox", andplot_typeis"kde", with cutoffs applied, the filename will be:age_boxcox_kde_cutoff.pngorage_boxcox_kde_cutoff.svg.

Available Scale Conversions

The scale_conversion parameter accepts several options for data scaling, providing flexibility in how you preprocess your data. Each option addresses specific transformation needs, such as normalizing data, stabilizing variance, or adjusting data ranges. Below is the exhaustive list of available scale conversions:

'abs': Takes the absolute values of the data, removing any negative signs.'log': Applies the natural logarithm to the data, useful for compressing large ranges and reducing skewness.'sqrt': Applies the square root transformation, often used to stabilize variance.'cbrt': Takes the cube root of the data, which can be useful for transforming both positive and negative values symmetrically.'stdrz': Standardizes the data to have a mean of 0 and a standard deviation of 1, also known as z-score normalization.'minmax': Rescales the data to a specified range, defaulting to [0, 1], ensuring that all values fall within this range.'boxcox': Applies the Box-Cox transformation to stabilize variance and make the data more normally distributed. Only works with positive values and supports passinglmbdaoralphafor flexibility.'robust': Scales the data based on percentiles (such as the interquartile range), which reduces the influence of outliers.'maxabs': Scales the data by dividing it by its maximum absolute value, preserving the sign of the data while constraining it to the range [-1, 1].'reciprocal': Transforms the data by taking the reciprocal (1/x), which is useful when handling values that are far from zero.'exp': Applies the exponential function to the data, which is useful for modeling exponential growth or increasing the impact of large values.'logit': Applies the logit transformation to data, which is only valid for values between 0 and 1. This is typically used in logistic regression models.'arcsinh': Applies the inverse hyperbolic sine transformation, which is similar to the logarithm but can handle both positive and negative values.'square': Squares the values of the data, effectively emphasizing larger values while downplaying smaller ones.'power': Applies the power transformation (Yeo-Johnson), which is similar to Box-Cox but works for both positive and negative values.

boxcox is just one of the many options available for transforming data in the data_doctor function, providing versatility to handle different scaling needs.

Box-Cox Transformation Example 1

In this example from the US Census dataset [1], we demonstrate the usage of the data_doctor

function to apply a Box-Cox transformation to the age column in a DataFrame.

The data_doctor function provides a flexible way to preprocess data by applying

various scaling techniques. In this case, we apply the Box-Cox transformation without any tuning

of the alpha or lambda parameters, allowing the function to handle the transformation in a

barebones approach. You can also choose other scaling conversions from the list of available

options (such as 'minmax', 'standard', 'robust', etc.), depending on your needs.

from eda_toolkit import data_doctor

data_doctor(

df=df,

feature_name="age",

data_fraction=0.6,

scale_conversion="boxcox",

apply_cutoff=False,

lower_cutoff=None,

upper_cutoff=None,

show_plot=True,

apply_as_new_col_to_df=True,

random_state=111,

)

DATA DOCTOR SUMMARY REPORT

+------------------------------+--------------------+

| Feature | age |

+------------------------------+--------------------+

| Statistic | Value |

+------------------------------+--------------------+

| Min | 3.6664 |

| Max | 6.8409 |

| Mean | 5.0163 |

| Median | 5.0333 |

| Std Dev | 0.6761 |

+------------------------------+--------------------+

| Quartile | Value |

+------------------------------+--------------------+

| Q1 (25%) | 4.5219 |

| Q2 (50% = Median) | 5.0333 |

| Q3 (75%) | 5.5338 |

| IQR | 1.0119 |

+------------------------------+--------------------+

| Outlier Bound | Value |

+------------------------------+--------------------+

| Lower Bound | 3.0040 |

| Upper Bound | 7.0517 |

+------------------------------+--------------------+

New Column Name: age_boxcox

Box-Cox Lambda: 0.1748

df.head()

| age | workclass | education | education-num | marital-status | occupation | relationship | age_boxcox | |

|---|---|---|---|---|---|---|---|---|

| census_id | ||||||||

| 582248222 | 39 | State-gov | Bachelors | 13 | Never-married | Adm-clerical | Not-in-family | 5.180807 |

| 561810758 | 50 | Self-emp-not-inc | Bachelors | 13 | Married-civ-spouse | Exec-managerial | Husband | 5.912323 |

| 598098459 | 38 | Private | HS-grad | 9 | Divorced | Handlers-cleaners | Not-in-family | 5.227960 |

| 776705221 | 53 | Private | 11th | 7 | Married-civ-spouse | Handlers-cleaners | Husband | 6.389562 |

| 479262902 | 28 | Private | Bachelors | 13 | Married-civ-spouse | Prof-specialty | Wife | 3.850675 |

Note

Notice that the unique identifiers function was also applied on the dataframe to generate randomized census IDs for the rows of the data.

Explanation

df=df: We are passingdfas the input DataFrame.feature_name="age": The feature we are transforming isage.data_fraction=1: We are using 100% of the data in theagecolumn. You can adjust this if you want to perform the operation on a subset of the data.scale_conversion="boxcox": This parameter defines the type of scaling we want to apply. In this case, we are using the Box-Cox transformation. You can changeboxcoxto any supported scale conversion method.apply_cutoff=False: We are not applying any outlier cutoff in this example.lower_cutoff=Noneandupper_cutoff=None: These are left asNonesince we are not applying outlier cutoffs in this case.show_plot=True: This option will generate a plot to visualize the distribution of theagecolumn before and after the transformation.apply_as_new_col_to_df=True: This tells the function to apply the transformation and create a new column in the DataFrame. The new column will be namedage_boxcox, where"boxcox"indicates the type of transformation applied.

Box-Cox Transformation: This transformation normalizes the data by making the distribution more Gaussian-like, which can be beneficial for certain statistical models.

No Outlier Handling: In this example, we are not applying any cutoffs to remove or modify outliers. This means the function will process the entire range of values in the

agecolumn without making adjustments for extreme values.New Column Creation: By setting

apply_as_new_col_to_df=True, a new column namedage_boxcoxwill be created in thedfDataFrame, where the transformed values will be stored. This allows us to keep the originalagecolumn intact while adding the transformed data as a new feature.The

show_plot=Trueparameter will generate a plot that visualizes the distribution of the originalagedata alongside the transformedage_boxcoxdata. This can help you assess how the Box-Cox transformation has affected the data distribution.

Box-Cox Transformation Example 2

In this second example from the US Census dataset [1], we apply the Box-Cox

transformation to the age column in a DataFrame, but this time with custom

keyword arguments passed through the scale_conversion_kws. Specifically, we

provide an alpha value of 0.8, influencing the confidence interval for the

transformation. Additionally, we customize the

visual appearance of the plots by specifying keyword arguments for the violinplot,

KDE, and histogram plots. These customizations allow for greater control over the

visual output.

from eda_toolkit import data_doctor

data_doctor(

df=df,

feature_name="age",

data_fraction=1,

scale_conversion="boxcox",

apply_cutoff=False,

lower_cutoff=None,

upper_cutoff=None,

show_plot=True,

apply_as_new_col_to_df=True,

scale_conversion_kws={"alpha": 0.8},

box_violin="violinplot",

box_violin_kws={"color": "lightblue"},

kde_kws={"fill": True, "color": "blue"},

hist_kws={"color": "green"},

random_state=111,

)

DATA DOCTOR SUMMARY REPORT

+------------------------------+--------------------+

| Feature | age |

+------------------------------+--------------------+

| Statistic | Value |

+------------------------------+--------------------+

| Min | 3.6664 |

| Max | 6.8409 |

| Mean | 5.0163 |

| Median | 5.0333 |

| Std Dev | 0.6761 |

+------------------------------+--------------------+

| Quartile | Value |

+------------------------------+--------------------+

| Q1 (25%) | 4.5219 |

| Q2 (50% = Median) | 5.0333 |

| Q3 (75%) | 5.5338 |

| IQR | 1.0119 |

+------------------------------+--------------------+

| Outlier Bound | Value |

+------------------------------+--------------------+

| Lower Bound | 3.0040 |

| Upper Bound | 7.0517 |

+------------------------------+--------------------+

New Column Name: age_boxcox

Box-Cox C.I. for Lambda: (0.1717, 0.1779)

Note

Note that this example specifies The theoretical overview section provides a detailed framework for a Box-Cox transformation.

df.head()

| age | workclass | education | education-num | marital-status | occupation | relationship | age_boxcox | |

|---|---|---|---|---|---|---|---|---|

| census_id | ||||||||

| 582248222 | 39 | State-gov | Bachelors | 13 | Never-married | Adm-clerical | Not-in-family | 3.936876 |

| 561810758 | 50 | Self-emp-not-inc | Bachelors | 13 | Married-civ-spouse | Exec-managerial | Husband | 4.019590 |

| 598098459 | 38 | Private | HS-grad | 9 | Divorced | Handlers-cleaners | Not-in-family | 4.521908 |

| 776705221 | 53 | Private | 11th | 7 | Married-civ-spouse | Handlers-cleaners | Husband | 5.033257 |

| 479262902 | 28 | Private | Bachelors | 13 | Married-civ-spouse | Prof-specialty | Wife | 5.614411 |

In this example, you can see how the data_doctor function supports further

flexibility with customizable plot aesthetics and scaling techniques. The

Box-Cox transformation is still applied without any tuning of the lambda parameter,

while the alpha value provides a confidence interval for the resulting transformation:

Box-Cox C.I. for Lambda: (0.1717, 0.1779)

This allows for tailored visualizations with consistent styling across multiple plot types.

Some of the keyword arguments, such as those passed in box_violin_kws, are

specific to Python version 3.7. For example, in this version, we remove the fill

color from the boxplot using boxprops.

box_violin_kws={

"boxprops": dict(facecolor="none", edgecolor="blue")

},

In later Python versions (e.g., 3.11),

this can be done more easily with fill=True. Therefore, it is important to pass

any desired keyword arguments based on the correct version of Python you’re using.

Explanation

df=df: We are passingdfas the input DataFrame.feature_name="age": The feature we are transforming isage.data_fraction=1: We are using 100% of the data in theagecolumn. You can adjust this if you want to perform the operation on a subset of the data.scale_conversion="boxcox": This parameter defines the type of scaling we want to apply. In this case, we are using the Box-Cox transformation.apply_cutoff=False: We are not applying any outlier cutoff in this example.lower_cutoff=Noneandupper_cutoff=None: These are left asNonesince we are not applying outlier cutoffs in this case.show_plot=True: This option will generate a plot to visualize the distribution of theagecolumn before and after the transformation.apply_as_new_col_to_df=True: This tells the function to apply the transformation and create a new column in the DataFrame. The new column will be namedage_boxcox_alphato indicate that an alpha parameter was used in the transformation.scale_conversion_kws={"alpha":0.8}: Thealphakeyword argument specifies the confidence interval for the Box-Cox transformation’s lambda value, ensuring a confidence interval is returned instead of a single lambda value.box_violin_kws={"boxprops": dict(facecolor='none', edgecolor="blue")}: This keyword argument customizes the appearance of the boxplot by removing the fill color and setting the edge color to blue. This syntax is specific to Python 3.7. In later versions (i.e., 3.11+), thefill=Trueargument can be used to control this behavior.kde_kws={"fill":True, "color":"blue"}: This fills the area under the KDE plot with a blue color, enhancing the plot’s visual presentation.hist_kws={"color":"blue"}: This colors the histogram bars in blue for visual consistency across plots.image_path_svg=image_path_svg: This parameter specifies the path where the resulting plot will be saved as an SVG file.save_plot=True: This tells the function to save the plot, and since an image path is provided, the plot will be saved as an SVG file.

Box-Cox Transformation with Confidence Interval: In this example, we use the Box-Cox transformation with the

alphaparameter set to 0.8, which returns a confidence interval for the lambda value rather than a single value.No Outlier Handling: Similar to Example 1, no outliers are handled in this transformation.

New Column Creation: The transformed data is added to the DataFrame in a new column named

age_boxcox_alpha, where “alpha” indicates the confidence interval applied in the Box-Cox transformation.Custom Plot Visuals: The KDE, histogram, and boxplot are customized with blue colors, and specific keyword arguments are provided for the boxplot appearance based on Python version. These changes allow for finer control over the visual aesthetics of the resulting plots.

Plot Saving: The

save_plotparameter is set toTrue, and the plot will be saved as an SVG file at the specified location.

Data Fraction Usage

In the Box-Cox transformation examples, you may notice a difference in the values for data_fraction:

In Box-Cox Example 1, we set

data_fraction=0.6.In Box-Cox Example 2, we used the full data with

data_fraction=1.

Despite using a data_fraction of 0.6 in Example 1, the function still processed

the entire dataset. The purpose of the data_fraction parameter is to allow

users to select a smaller subset of the data for sampling and transformation while

ensuring the final operation is applied to the full scope of data.

This behavior is intentional, as it serves to:

1. Ensure Reproducibility: By using a consistent random_state, the sampled

subset can reliably represent the dataset, regardless of data_fraction.

2. Preserve Sampling Assumptions: Applying the desired operation (e.g., transformations) on the full data aligns the sample with the larger population and allows a seamless projection of the sample properties to the entire dataset.

Thus, while data_fraction provides a way to adjust the percentage of data

used for sampling, the function will always apply the transformation across the

full dataset, balancing performance efficiency with statistical integrity.

Retaining a Sample for Analysis

To sample the exact subset used in the data_fraction=0.6 calculation, you

can directly sample from the DataFrame with a consistent random state for

reproducibility. This method allows you to work with a representative subset of

the data while preserving the original distribution characteristics.

To sample 60% of the data using the exact logic of the data_doctor function,

use the following code:

sampled_df = df.sample(frac=0.6, random_state=111)

The random_state parameter ensures that the sampled data remains consistent

across runs. After creating this subset, you can apply the data_doctor

function to sampled_df as shown below to perform the Box-Cox transformation

on the age column:

from eda_toolkit import data_doctor

data_doctor(

df=sampled_df,

feature_name="age",

data_fraction=1,

scale_conversion="boxcox",

apply_cutoff=False,

lower_cutoff=None,

upper_cutoff=None,

show_plot=True,

apply_as_new_col_to_df=True,

random_state=111,

)

By setting data_fraction=1 within the data_doctor function, you ensure

that it operates on the entire sampled_df, which now consists of the selected

60% subset. To confirm that the sampled data is indeed 60% of the original

DataFrame, you can print the shape of sampled_df as follows:

print(

f"The sampled dataframe has {sampled_df.shape[0]} rows and {sampled_df.shape[1]} columns."

)

The sampled dataframe has 29305 rows and 16 columns.

We can also inspect the first five rows of the sampled_df dataframe below:

| age | workclass | education | education-num | marital-status | occupation | relationship | age_boxcox | |

|---|---|---|---|---|---|---|---|---|

| census_id | ||||||||

| 408117383 | 40 | Private | Some-college | 10 | Married-civ-spouse | Machine-op-inspct | Husband | 4.355015 |

| 669717925 | 58 | Private | HS-grad | 9 | Married-civ-spouse | Exec-managerial | Husband | 5.086108 |

| 399428377 | 41 | Private | HS-grad | 9 | Separated | Machine-op-inspct | Not-in-family | 5.037743 |

| 961427355 | 73 | NaN | Some-college | 10 | Married-civ-spouse | NaN | Husband | 4.216561 |

| 458295720 | 19 | Private | HS-grad | 9 | Never-married | Farming-fishing | Not-in-family | 5.520438 |

Logit Transformation Example

In this example, we demonstrate the usage of the data_doctor function to

apply a logit transformation to a feature in a DataFrame. The logit transformation

is used when dealing with data bounded between 0 and 1, as it maps values within

this range to an unbounded scale in log-odds terms, making it particularly useful

in fields such as logistic regression.

Note

The data_doctor function provides a range of scaling options, and in this case,

we use the logit transformation to illustrate how the transformation is applied.

However, it’s important to note that if the feature contains values outside the (0, 1)

range, the function will raise a ValueError. This is because the logit function

is undefined for values less than or equal to 0 and greater than or equal to 1.

from eda_toolkit import data_doctor

data_doctor(

df=df,

feature_name="age",

data_fraction=1,

scale_conversion="logit",

apply_cutoff=False,

lower_cutoff=None,

upper_cutoff=None,

show_plot=True,

apply_as_new_col_to_df=True,

random_state=111,

)

Error

ValueError: Logit transformation requires values to be between 0 and 1. Consider using a scaling method such as min-max scaling first.

If you attempt to apply this transformation to data outside the (0, 1) range, such as an unscaled numerical feature, the function will halt and display an error message advising you to use an appropriate scaling method first.

If you encounter this error, it is recommended to first scale your data using a method like min-max scaling to bring it within the (0, 1) range before applying the logit transformation.

In this example:

df=df: Specifies the DataFrame containing the feature.feature_name="feature_proportion": The feature we are transforming should be bounded between 0 and 1.scale_conversion="logit": Sets the transformation to logit. Ensure thatfeature_proportionvalues are within (0, 1) before applying.show_plot=True: Generates a plot of the transformed feature.

Plain Outliers Example

Observed Outliers Sans Cutoffs

In this example, we examine the final weight (fnlwgt) feature from the US Census

dataset [1], focusing on detecting outliers without applying any scaling

transformations. The data_doctor function is used with minimal configuration

to visualize where outliers are present in the raw data.

By enabling apply_cutoff=True and selecting plot_type=["box_violin", "hist"],

we can clearly identify outliers both visually and numerically. This basic setup

highlights the outliers without altering the data distribution, making it easy

to see extreme values that could affect further analysis.

The following code demonstrates this:

from eda_toolkit import data_doctor

data_doctor(

df=df,

feature_name="fnlwgt",

data_fraction=0.6,

plot_type=["box_violin", "hist"],

hist_kws={"color": "gray"},

figsize=(8, 4),

image_path_svg=image_path_svg,

save_plot=True,

random_state=111,

)

DATA DOCTOR SUMMARY REPORT

+------------------------------+--------------------+

| Feature | fnlwgt |

+------------------------------+--------------------+

| Statistic | Value |

+------------------------------+--------------------+

| Min | 12,285.0000 |

| Max | 1,484,705.0000 |

| Mean | 189,181.3719 |

| Median | 177,955.0000 |

| Std Dev | 105,417.5713 |

+------------------------------+--------------------+

| Quartile | Value |

+------------------------------+--------------------+

| Q1 (25%) | 117,292.0000 |

| Q2 (50% = Median) | 177,955.0000 |

| Q3 (75%) | 236,769.0000 |

| IQR | 119,477.0000 |

+------------------------------+--------------------+

| Outlier Bound | Value |

+------------------------------+--------------------+

| Lower Bound | -61,923.5000 |

| Upper Bound | 415,984.5000 |

+------------------------------+--------------------+

In this visualization, the boxplot and histogram display outliers prominently, showing you exactly where the extreme values lie. This setup serves as a baseline view of the raw data, making it useful for assessing the initial distribution before any scaling or transformation is applied.

Treated Outliers With Cutoffs

In this scenario, we address the extreme values observed in the fnlwgt feature

by applying a visual cutoff based on the distribution seen in the previous example.

Here, we set an approximate upper cutoff of 400,000 to limit the impact of outliers

without any additional scaling or transformation. By using apply_cutoff=True along

with upper_cutoff=400000, we effectively cap the extreme values.

This example also demonstrates how you can further customize the visualization by

specifying additional histogram keyword arguments with hist_kws. Here, we use

bins=20 to adjust the bin size, creating a smoother view of the feature’s

distribution within the cutoff limits.

In the resulting visualization, you will see that the boxplot and histogram have a controlled range due to the applied upper cutoff, limiting the influence of extreme outliers on the visual representation. This treatment provides a clearer view of the primary distribution, allowing for a more focused analysis on the bulk of the data without outliers distorting the scale.

The following code demonstrates this configuration:

from eda_toolkit import data_doctor

data_doctor(

df=df,

feature_name="fnlwgt",

data_fraction=0.6,

apply_as_new_col_to_df=True,

apply_cutoff=True,

upper_cutoff=400000,

plot_type=["box_violin", "hist"],

hist_kws={"color": "gray", "bins": 20},

figsize=(8, 4),

image_path_svg=image_path_svg,

save_plot=True,

random_state=111,

)

| age | workclass | fnlwgt | education | marital-status | occupation | relationship | fnlwgt_w_cutoff | |

|---|---|---|---|---|---|---|---|---|

| census_id | ||||||||

| 582248222 | 39 | State-gov | 77516 | Bachelors | Never-married | Adm-clerical | Not-in-family | 132222 |

| 561810758 | 50 | Self-emp-not-inc | 83311 | Bachelors | Married-civ-spouse | Exec-managerial | Husband | 68624 |

| 598098459 | 38 | Private | 215646 | HS-grad | Divorced | Handlers-cleaners | Not-in-family | 161880 |

| 776705221 | 53 | Private | 234721 | 11th | Married-civ-spouse | Handlers-cleaners | Husband | 73402 |

| 479262902 | 28 | Private | 338409 | Bachelors | Married-civ-spouse | Prof-specialty | Wife | 97261 |

RobustScaler Outliers Example

In this example from the US Census dataset [1], we apply the RobustScaler

transformation to the age column in a DataFrame to address potential outliers.

The data_doctor function enables users to apply transformations with specific

configurations via the scale_conversion_kws parameter, making it ideal for

refining how outliers affect scaling.

For this example, we set the following custom keyword arguments:

Disable centering: By setting

with_centering=False, the transformation scales based only on the range, without shifting the median to zero.Adjust quantile range: We specify a narrower

quantile_rangeof (10.0, 90.0) to reduce the influence of extreme values on scaling.

The following code demonstrates this transformation:

from eda_toolkit import data_doctor

data_doctor(

df=df,

feature_name='age',

data_fraction=0.6,

scale_conversion="robust",

apply_as_new_col_to_df=True,

scale_conversion_kws={

"with_centering": False, # Disable centering

"quantile_range": (10.0, 90.0) # Use a custom quantile range

},

random_state=111,

)

DATA DOCTOR SUMMARY REPORT

+------------------------------+--------------------+

| Feature | age |

+------------------------------+--------------------+

| Statistic | Value |

+------------------------------+--------------------+

| Min | 0.4722 |

| Max | 2.5000 |

| Mean | 1.0724 |

| Median | 1.0278 |

| Std Dev | 0.3809 |

+------------------------------+--------------------+

| Quartile | Value |

+------------------------------+--------------------+

| Q1 (25%) | 0.7778 |

| Q2 (Median) | 1.0278 |

| IQR | 0.5556 |

| Q3 (75%) | 1.3333 |

| Q4 (Max) | 2.5000 |

+------------------------------+--------------------+

| Outlier Bound | Value |

+------------------------------+--------------------+

| Lower Bound | -0.0556 |

| Upper Bound | 2.1667 |

+------------------------------+--------------------+

New Column Name: age_robust

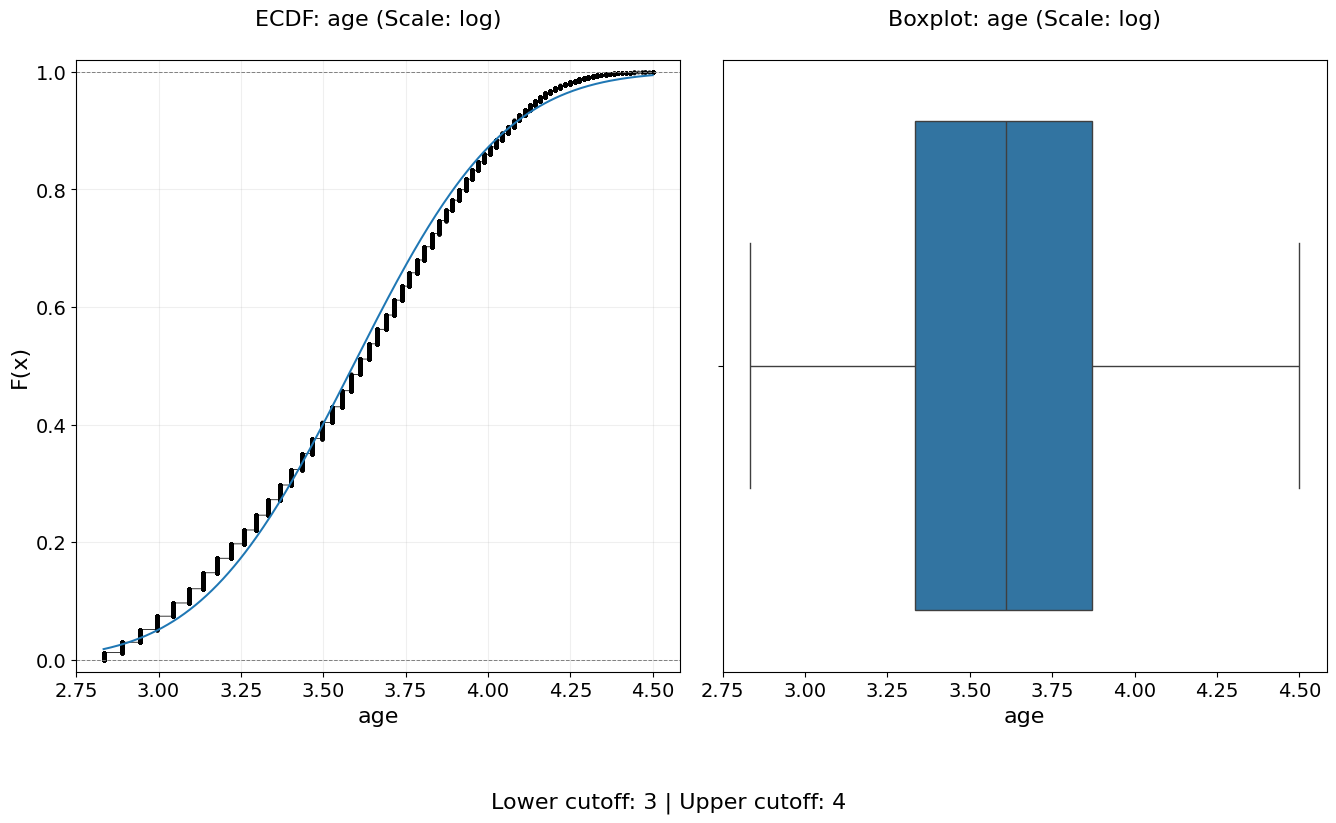

ECDF Distribution Example

In this example, we demonstrate the use of the Empirical Cumulative Distribution

Function (ECDF) to visualize the distribution of the "age" variable from the

US Census Adult Income dataset [1].

ECDF plots provide a robust, non-parametric view of a variable’s distribution by showing the cumulative proportion of observations less than or equal to a given value. Unlike histograms or kernel density estimates, ECDFs do not rely on binning or smoothing parameters, making them especially useful for diagnostic analysis.

For a formal mathematical definition and theoretical background of the ECDF, see the Empirical Cumulative Distribution Function (ECDF) section in the theoretical overview.

This example uses the data_doctor function to generate multiple complementary

distribution views for the same feature:

Kernel Density Estimate (

"kde")Empirical Cumulative Distribution Function (

"ecdf")Box and violin plots (

"box_violin")

In addition, a logarithmic scaling transformation is applied to the "age"

feature via scale_conversion="log", allowing the cumulative behavior of the

distribution to be examined after compressing the right tail.

The following code demonstrates this workflow:

from eda_toolkit import data_doctor

print("\nRunning data_doctor with plot_type=['kde', 'ecdf', 'box_violin'] ...\n")

data_doctor(

df=df,

feature_name="age",

plot_type=["kde", "ecdf", "box_violin"],

scale_conversion="log",

)

Running data_doctor with plot_type=['kde', 'ecdf', 'box_violin'] ...

DATA DOCTOR SUMMARY REPORT

+------------------------------+--------------------+

| Feature | age |

+------------------------------+--------------------+

| Statistic | Value |

+------------------------------+--------------------+

| Min | 2.8332 |

| Max | 4.4998 |

| Mean | 3.5905 |

| Median | 3.6109 |

| Std Dev | 0.3617 |

+------------------------------+--------------------+

| Quartile | Value |

+------------------------------+--------------------+

| Q1 (25%) | 3.3322 |

| Q2 (50% = Median) | 3.6109 |

| Q3 (75%) | 3.8712 |

| IQR | 0.5390 |

+------------------------------+--------------------+

| Outlier Bound | Value |

+------------------------------+--------------------+

| Lower Bound | 2.5237 |

| Upper Bound | 4.6797 |

+------------------------------+--------------------+

Stacked Crosstab Plots

Generate stacked or regular bar plots and crosstabs for specified columns in a DataFrame.

The stacked_crosstab_plot function is a powerful tool for visualizing categorical data relationships through stacked bar plots and contingency tables (crosstabs). It supports extensive customization options, including plot appearance, color schemes, and saving output in multiple formats. Users can choose between regular or normalized plots and control whether the function returns the generated crosstabs as a dictionary.

- stacked_crosstab_plot(df, col, func_col, legend_labels_list, title, kind='bar', width=0.9, rot=0, custom_order=None, image_path_png=None, image_path_svg=None, save_formats=None, color=None, output='both', return_dict=False, x=None, y=None, p=None, file_prefix=None, logscale=False, plot_type='both', show_legend=True, legend_loc='best', label_fontsize=12, tick_fontsize=10, text_wrap=50, remove_stacks=False, xlim=None, ylim=None)

- Parameters:

df (pandas.DataFrame) – The DataFrame containing the data to plot.

col (str) – The name of the column in the DataFrame to be analyzed.

func_col (list of str) – List of columns in the DataFrame to generate the crosstabs and stack the bars in the plot.

legend_labels_list (list of list of str) – List of legend labels corresponding to each column in

func_col.title (list of str) – List of titles for each plot generated.

kind (str, optional) – Type of plot to generate (